Over the last few months I’ve talked with many professionals about AI. The same issues come up again and again in these conversations: environmental impact, copyright infringement, poor results, etc. Some people, especially in the nonprofit sector, don’t want to use AI at all because of these issues.

I agree that these issues exist, but I also think some of the potential problems have been exaggerated or can be greatly reduced if we use AI “properly”. So I want to share some data and solutions.

Let’s discuss the main issues with AI*:

*️⃣ Note

We will focus here mostly on GenAI, since it’s the most popular and “problematic” type of AI right now. But it’s important to note that AI is not only GenAI, LLMs or ChatGPT. There are many types of AI (some of them have been used for decades and don’t have the same issues). Maybe in a few years we won’t even use GPTs or LLMs anymore because we’ll have found better AI solutions.

Poor results

This is probably the most important point. If AI gives bad results, nothing else really matters: nobody should use it, even if it had no other issues.

But many studies show great results with AI (and most of them used older models; the better models we have now should give even better results):

- Workers using AI tripled their productivity (completing in 30 minutes tasks that would have taken them about 90 minutes on average without AI).

- Using generative AI improves employee productivity by 66% on average.

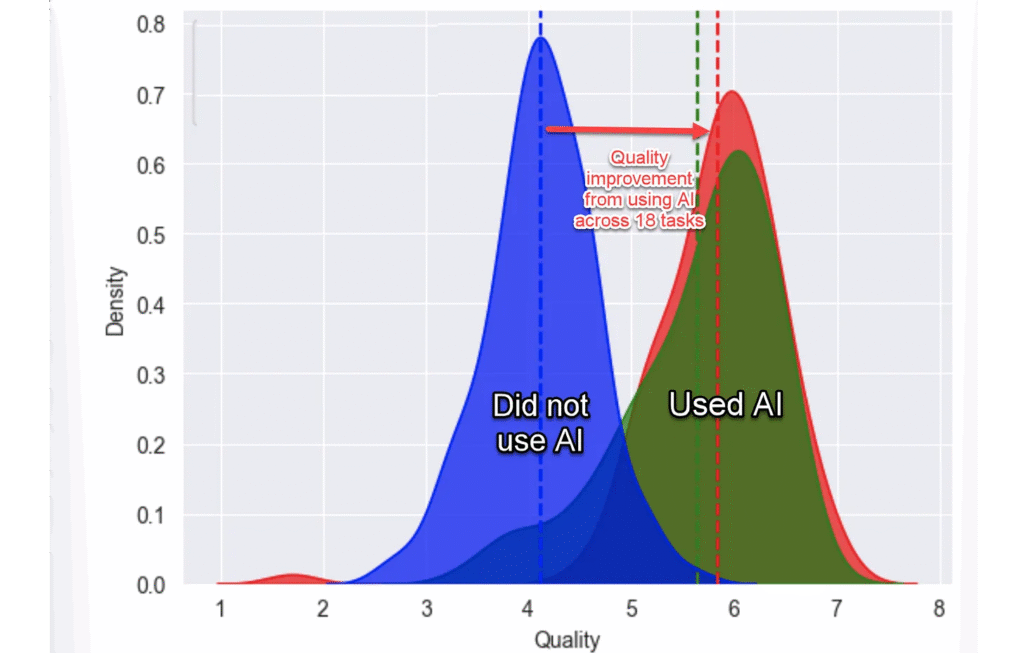

- Top-tier BCG consultants using AI finished 12.2% more tasks on average and produced 40% higher-quality results than those working without it.

Another data point: ChatGPT has more than 400 million active users. So we can assume that millions of people are getting good results, at least for some tasks (if they only saw poor results, they would have stopped using it).

Also, many big companies are investing billions in AI, far more than they have ever invested in anything else (the Internet included). So unless we think they are foolish or happy to lose billions for no reason, we should assume they see huge potential to increase results, productivity, innovation, etc.

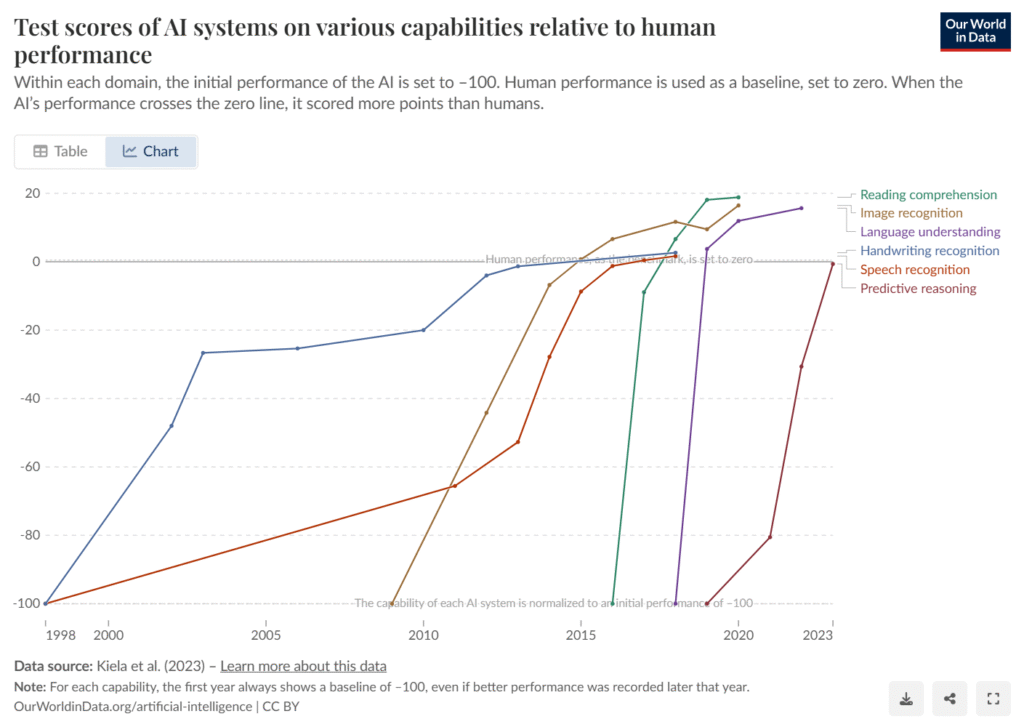

There are certainly some tasks where AI doesn’t deliver good results (yet). But there are also many tasks where AI is already better than humans (or at least much faster with similar results). It’s not easy to predict the “winners” beforehand, because AI doesn’t think or behave like us. We have to try different tasks, tools and prompts. Some experts call this the “jagged frontier” of AI.

Between 40% and 69% of nonprofit organizations have not received any formal AI training. So many people reporting bad results probably don’t know how to use AI tools properly (which is normal: the tools are pretty new and there is a lot to learn). They are probably not using “prompt engineering” techniques, advanced features and settings, specialized tools instead of ChatGPT for everything, etc. So it’s not just a tool problem but a people problem (most professionals haven’t received proper training yet).

For example, some people complain that ChatGPT responses are not always reliable (and that’s true: LLMs sometimes invent, or “hallucinate”, information), but that doesn’t make AI useless. You just have to know how to choose and use different AI tools (e.g. use AI tools designed for research, like Perplexity or Deep Research, when you need reliable facts and citations).

There are now thousands of AI tools available and thousands of possible uses for AI. So if you have only tried ChatGPT for a couple of tasks, you have explored less than 1% of AI’s “opportunities”. Maybe it’s time to explore the other 99%.

Environmental impact

The AI industry is in hyper-growth mode and that has a big environmental impact. No doubt about it. Big data centers with thousands of powerful chips consume a lot of energy (not 100% renewable in most cases), water (although this might change in the future) and materials.

But using AI responsibly is probably not as bad for the environment as many people think.

There are two main stages in the life of an AI model: training it and running it (the latter is also called “inference”).

1) Training new AI models (especially frontier LLMs) consumes a lot of energy, but for popular models that training “cost” is spread across millions of users. And a trained model can in theory be used forever (especially true for open-source models). So in the long term, the training impact per use may be quite small for some of the current AI models.
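To make this amortization argument concrete, here is a rough per-use estimate. It is a simplified sketch of the reasoning, not a formula taken from the sources above; the symbols are introduced here for illustration only: E_train is the one-off energy spent on training, N is the total number of requests the model ends up serving, and e_inf is the energy of a single request.

$$
e_{\text{use}} = \frac{E_{\text{train}}}{N} + e_{\text{inf}},
\qquad
\lim_{N \to \infty} e_{\text{use}} = e_{\text{inf}}
$$

The more requests a model serves, the smaller the training share of each one, so for very widely used models the per-use cost approaches the cost of inference alone.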

Also, AI companies are probably going to keep training new models (at least for a few years) even if many people refuse to use them, partly because they are focused on winning the AI/ASI race rather than on making money right now. Some AI companies with billions in funding don’t even plan to launch a public product anytime soon, because they are focused on training a future ASI (Artificial Super Intelligence), not on current AI uses or models.

So we could argue that AI training costs are not directly linked to our individual use. If companies will train those models anyway (or have trained them already), we might as well use them.

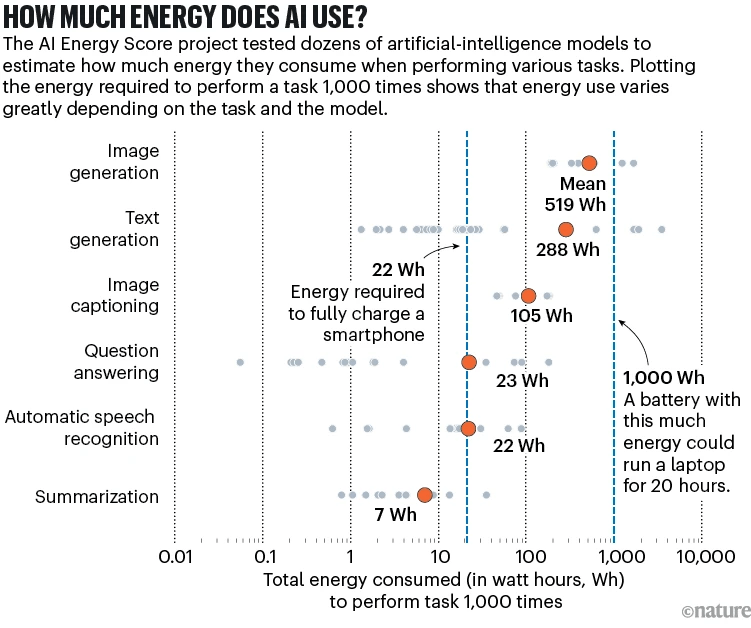

2) Running AI models can have a large energy cost (for complex tasks like generating long videos) or a fairly small one (for simple tasks like asking short questions to small text models). It varies a lot depending on the task and the model you are using.

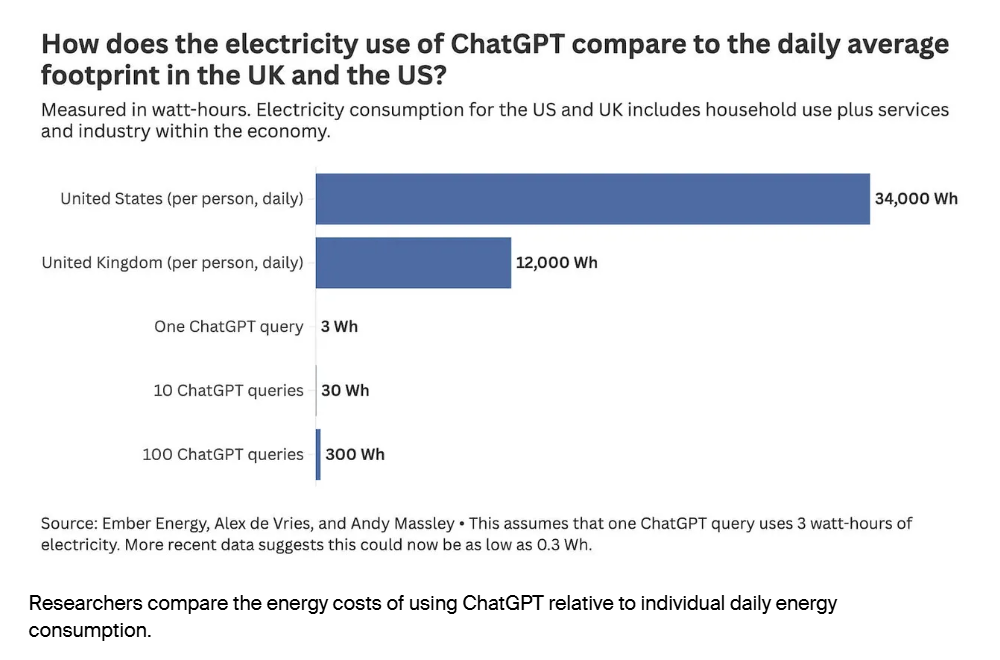

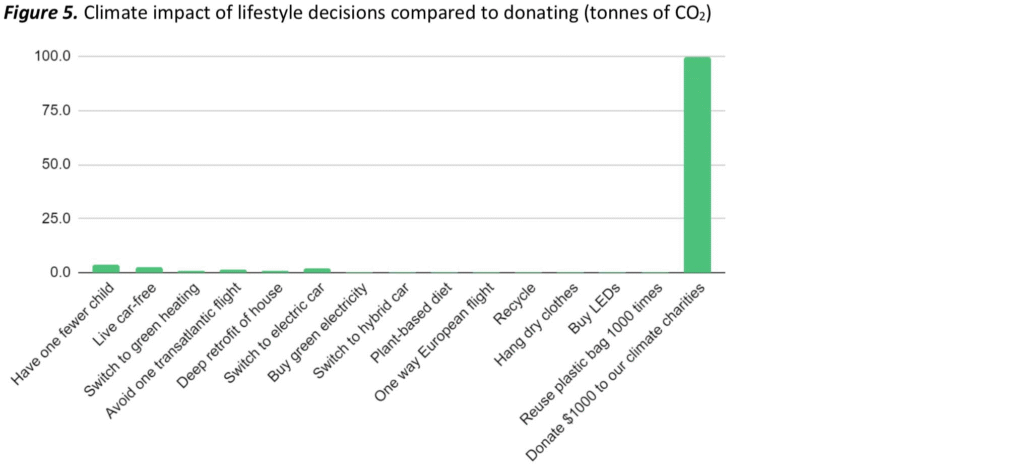

According to MIT, the energy needed for simple AI chatbot tasks can be around 114 joules per response. That’s very little energy. Your laptop probably consumes around 3,000 joules per minute (26x more). Watching 1 hour of Netflix might consume more than 200,000 joules (1,700x more). Your household might consume about 100,000,000 joules per day (roughly 880,000x more). So asking AI models a few simple questions makes almost no difference to your overall energy consumption (probably well under 1% of your energy use).
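The ratios above are simple divisions, so they are easy to check or redo with your own numbers. Here is a minimal Python sketch using the rough figures quoted in that paragraph (estimates, not measurements); the function names and the 20-responses-a-day scenario are hypothetical, chosen only for illustration:

```python
# Rough energy figures quoted in the text, in joules (estimates, not measurements).
CHATBOT_RESPONSE_J = 114            # one simple chatbot response (MIT figure)
LAPTOP_PER_MINUTE_J = 3_000         # a laptop running for one minute
NETFLIX_PER_HOUR_J = 200_000        # one hour of video streaming
HOUSEHOLD_PER_DAY_J = 100_000_000   # one household for one day


def ratio_to_response(energy_j: float) -> float:
    """How many simple chatbot responses use the same energy as `energy_j`."""
    return energy_j / CHATBOT_RESPONSE_J


def share_of_household_day(responses: int) -> float:
    """Percentage of a household's daily energy used by `responses` simple replies."""
    return 100 * responses * CHATBOT_RESPONSE_J / HOUSEHOLD_PER_DAY_J


for label, joules in [("laptop, 1 min", LAPTOP_PER_MINUTE_J),
                      ("Netflix, 1 h", NETFLIX_PER_HOUR_J),
                      ("household, 1 day", HOUSEHOLD_PER_DAY_J)]:
    print(f"{label}: {ratio_to_response(joules):,.0f}x one response")

# Hypothetical scenario: 20 simple chatbot responses in one day.
print(f"20 responses: {share_of_household_day(20):.4f}% of a household day")
```

Swapping in your own estimates (a different model, a desktop instead of a laptop) only means changing the constants. With these inputs the household ratio comes out at roughly 877,000 responses per day, and 20 simple responses amount to about 0.002% of a household’s daily energy.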

1 hour of gaming on your PS5 or watching YouTube/Netflix might have more impact than 1 hour of using ChatGPT, and ChatGPT can obviously be much more productive. So instead of refusing to use AI, it may be more effective to change other things in your life that may have 100x more environmental impact.

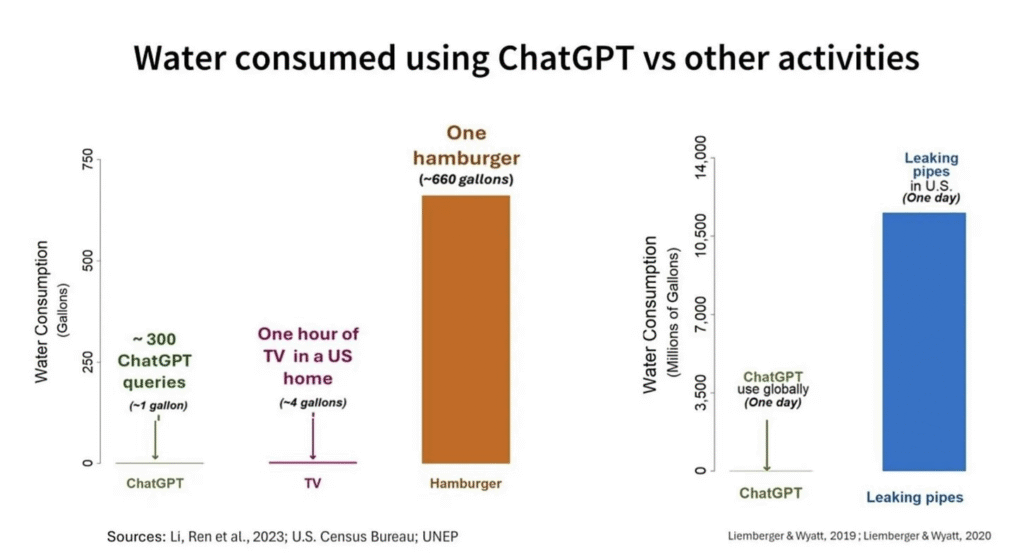

Regarding water, the picture is pretty similar: using ChatGPT for a few simple queries has almost no impact compared with everyday habits like eating a hamburger.

Using AI may even save energy in some cases. For example, when an AI chatbot gives you a good result in a few seconds instead of you spending 20 minutes on your laptop producing the same thing “manually” (without AI).
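Using the figures quoted earlier (about 3,000 joules per minute for a laptop and about 114 joules per simple chatbot response), the 20-minute example works out roughly as follows. This is back-of-the-envelope arithmetic that ignores the time you still spend on your laptop writing prompts and checking answers:

$$
20\ \text{min} \times 3{,}000\ \tfrac{\text{J}}{\text{min}} = 60{,}000\ \text{J} \approx 526 \times 114\ \text{J}
$$

So 20 minutes of manual laptop work uses about as much energy as 500 or so simple chatbot responses. Even if the AI route takes a few prompts plus a couple of minutes of reviewing (say 5 × 114 J + 2 × 3,000 J ≈ 6,600 J, purely hypothetical numbers), it still comes out well ahead.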

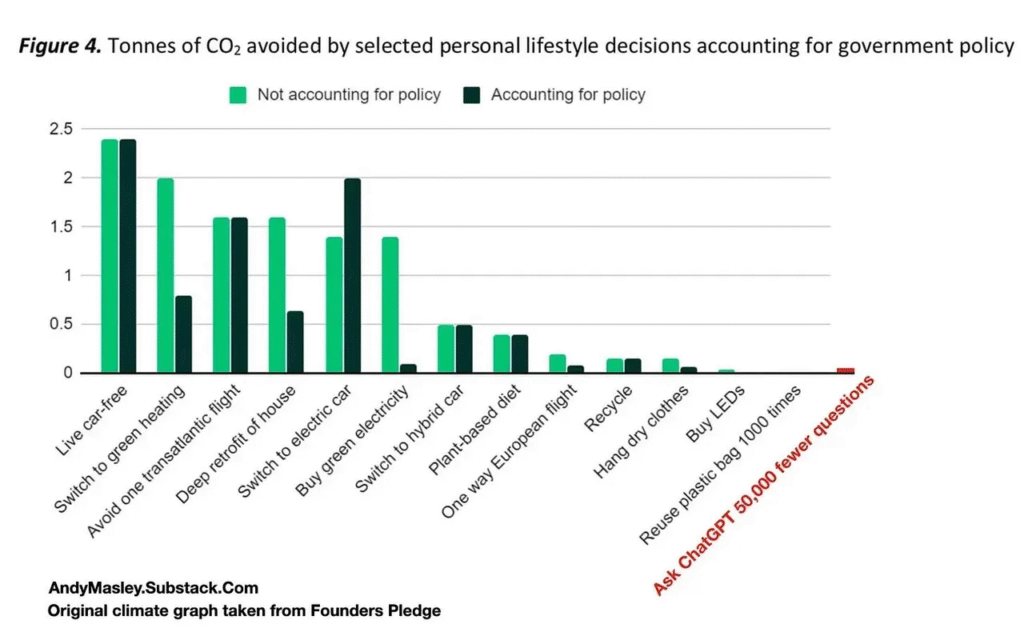

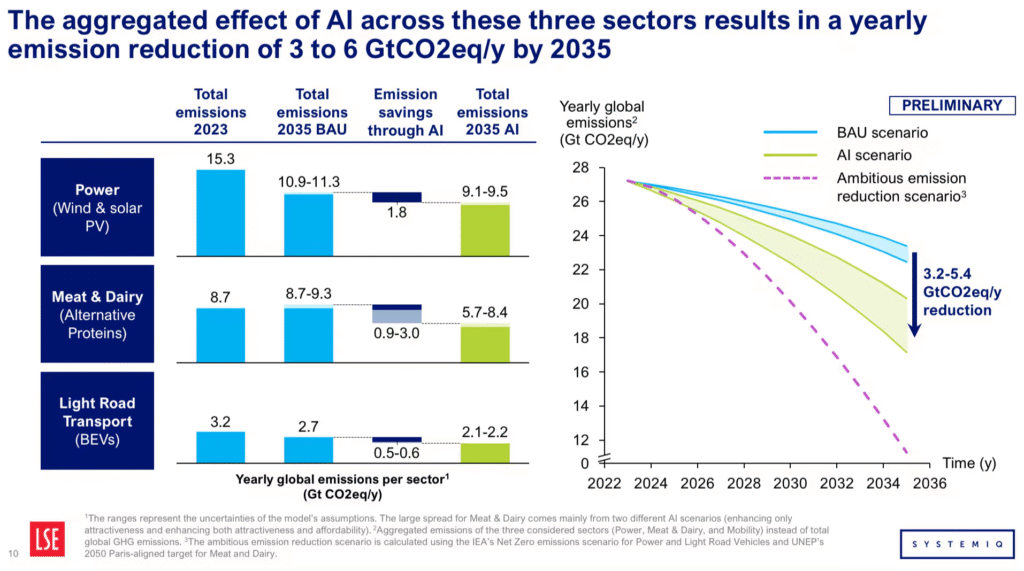

Also, some experts argue that AI might actually help us reduce our emissions in the next decade (by accelerating innovation, optimizing complex systems in key industries, etc.). So AI’s overall impact on the environment might become very positive, even if running AI systems consumes significant resources. But for now that’s based only on long-term projections and is far from guaranteed.

It’s difficult to calculate the exact environmental impact of our individual AI use. There is little reliable data and there are dozens of variables to take into account (which prompt and model you are using, whether you are using cloud AI or a model installed on your own computer, whether you include the energy cost of AI training in your individual use, what non-AI solution you would use instead for those tasks, whether the results of your work may help the environment, etc.).

But I think we can draw 4 main lessons:

1) We should consider the environmental impact of different AI uses and try to use AI only when the positive impact (on society, our organizations and/or ourselves) is greater than the energy cost. For example, we should probably not create AI videos for jokes or other trivial things (big energy cost, zero positive impact). But if we can find simple AI tasks that save a lot of time or improve our results, I think we should go for it (small energy cost, positive impact on society).

2) We should learn more about AI so we can use it more efficiently: learn “prompt engineering” to avoid spending time and energy on mediocre results, avoid using big AI reasoning models for easy tasks, share what we learn with our colleagues instead of doing all the trial and error on our own, etc.

3) If you want to reduce your environmental impact to a minimum, you can install open-source AI models on your own computer (especially if you have one of the new laptops that are both energy-efficient and powerful enough for AI workloads: MacBooks with M3/M4 chips, Copilot+ PCs…). Open-source AI models are good enough for many tasks, and in some cases you can even save energy by using them (compared to not using AI and spending many hours doing manual work on your computer). As a bonus, they can have privacy and security benefits (since you are not sharing anything with third-party tools or cloud servers).

4) If you still feel bad about your AI use, donate to a climate charity or buy carbon offsets to compensate for it.

Copyright issues

AI models (especially LLMs) are trained using massive amounts of data & content. Most AI companies are not very transparent about which content they use exactly, but we can assume that a significant part of it is copyrighted content.

There is a big debate around this (including many court cases). Some say it’s a huge copyright infringement. Others argue that training an AI model on copyrighted content is “fair use”, since it’s closer to learning from and taking inspiration from other creators (as human artists do all the time) than to copying specific content and reselling it.

Anyway, I think most of us understand why many creators are mad at AI companies using their content and giving nothing in return.

There are some solutions for creators who don’t want their content to be included in AI training (blocking AI scrapers with robots.txt and other tools, applying protective tools like Glaze to their artwork…). But even if those solutions worked perfectly, getting zero compensation is not a great outcome for many artists and creators (some are earning much less than before the “AI era”).
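As an illustration of the robots.txt approach, here is a minimal sketch: a robots.txt that asks three AI-training crawlers to stay away while leaving the site open to everyone else, checked with Python’s built-in `urllib.robotparser`. The crawler names (`GPTBot` for OpenAI, `CCBot` for Common Crawl, `Google-Extended` for Google’s AI training) are user-agent tokens those companies have published; the list is not exhaustive and changes over time, so check each crawler’s own documentation. The page URL is hypothetical. Keep in mind that robots.txt is a request, not an enforcement mechanism: well-behaved crawlers honor it, others may not.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: block selected AI-training crawlers, allow everyone else.
# The crawler list is illustrative, not exhaustive.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
page = "https://example.org/gallery/artwork.html"  # hypothetical page on your site

for agent in ["GPTBot", "CCBot", "Google-Extended", "Mozilla/5.0"]:
    allowed = parser.can_fetch(agent, page)
    print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```

In practice the `ROBOTS_TXT` text is what you would publish at the root of your website (`/robots.txt`); the parser part is only a quick way to check that the rules say what you intended.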

As users, we can prioritize ethically-trained AI models (trained on public domain and/or licensed content). A few exist (especially for image and audio generation), such as:

- Shutterstock AI: AI image generator that ensures fair compensation for artists whose work contributed to the training data.

- Blunge.ai: AI image generator that prevents AI-generated art theft.

- Models certified by the nonprofit organization Fairly Trained.

As far as I know, none of the big AI tools (ChatGPT, Google Gemini, Claude, etc.) can be considered ethically-trained right now.

If you want to use those AI tools but still support creators, you could “compensate” by buying something from creators each month (art, digital products, memberships, services…) or donating to individual artists or associations. It would be similar to carbon offsetting, but for creators affected by AI.

I hope the AI industry comes up with a better solution (maybe forced by laws or courtroom decisions), but in the meantime…

Privacy & security issues

Some organizations are uploading lots of files (even their whole drives) to AI tools without being careful about it.

Most big AI tools are probably pretty secure, but almost anything that is online can get hacked (at the user level or at the company/server level). There are also other privacy and security issues related to AI use (people gaining access via AI to messages or files they shouldn’t see, data exfiltration via agents, etc.).

Also, some AI tools reserve the right to train their models on your messages and data, but they usually offer an option to turn this off and/or don’t do it at all for paid accounts and API usage.

I think all organizations should train their staff to mitigate these risks. Just telling people not to use AI at all doesn’t work: around 35% of people hide their AI use from their employers. So even if your organization forbids any AI use, the risks will still be there (and may even increase, because people can’t openly share their concerns, security tips, etc.).

A lot of these risks can be avoided if we just don’t give sensitive information to AI (there are plenty of AI uses that don’t require that info).
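One low-tech way to apply this is to strip obvious identifiers before pasting text into a cloud AI tool. Below is a hypothetical helper (`redact` is not part of any product) that uses two deliberately simple regular expressions for email addresses and phone-like numbers. It will miss plenty of sensitive data (names, addresses, case details) and will over-redact things like dates, so treat it as a first filter, not a guarantee.

```python
import re

# Deliberately simple patterns; real personal data comes in many more shapes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # 9+ characters of digits/separators


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, so digits inside them aren't read as phones
    return PHONE.sub("[PHONE]", text)


note = "Call Ana at +34 612 345 678 or write to ana.garcia@example.org about the grant."
print(redact(note))
```

Note that the name “Ana” survives: pattern matching only catches data with a predictable shape, which is why not sharing sensitive material in the first place remains the safer default.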

If you need to use sensitive information, consider open-source AI models installed on your own computer (e.g. via Ollama or LM Studio) instead of cloud services (e.g. ChatGPT). Or at least delete the sensitive files and conversations from those cloud AI services as soon as you are done with them.
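For the local option, Ollama runs a small HTTP server on your own machine (by default at `http://localhost:11434`), so prompts never leave your computer. The sketch below assumes Ollama is installed and running and that you have already downloaded a model; `llama3.2` is only an example model name, and `build_request`/`ask_local_model` are hypothetical helpers built on Python’s standard library, not part of Ollama itself. It posts to Ollama’s `/api/generate` endpoint with streaming turned off, so the full answer comes back as one JSON object whose `response` field holds the text.

```python
import json
import urllib.request

# Ollama's default local endpoint; requests stay on this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally installed model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]
```

Usage, with the server running and the model pulled: `ask_local_model("llama3.2", "Summarize this donor letter in three bullet points: ...")` returns the model’s reply as a string. LM Studio also offers a local server, but its API differs, so this exact code is Ollama-specific.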

Impact on society (jobs, creativity, critical thinking, misinformation, bias…)

The future is full of uncertainties, but there are also some things we can be fairly sure of:

1) AI will change the labor market. It will change, remove and add jobs. Professionals who don’t adapt to the changes may have trouble keeping or finding jobs in a few years. Professionals who adapt will probably thrive (apparently there is already a 56% wage premium for AI skills). If we achieve Artificial Super Intelligence (an AI that is better than humans at most tasks, which according to many experts may happen within a few years), it will probably lead to the most profound transformation of the labor market ever.

2) AI will have some positive and negative effects on society, just as every powerful new technology before it did: the Internet, smartphones, social media, etc. The impact depends on how we use these powerful tools. For example, AI can make students lazier, but it can also improve their learning performance and higher-order thinking. It can also open up opportunities that were impossible without AI (such as unlimited tutors guiding each student individually, anywhere in the world and almost for free).

3) There is no going back: we live in an AI world now. The potential benefits of Artificial Super Intelligence are too high, so all the big companies and governments will continue pushing for it. Even if social pressure manages to stop AI use or development in one country, the rest will continue the race. And even if all countries decided to forbid AI development or all AI companies went bankrupt (which is basically impossible), there are already dozens of great open-source models that we can install on our computers and use for free forever. AI will never go away.

So ethically you have basically 2 options:

A) You can refuse to use any AI. This would have a very small impact on society: Millions of people will continue using AI (including bad actors), companies will keep training new models, etc.

And it could have a very negative impact on your life: you may become “unemployable” in a few years. There is a high chance that we will reach the point where refusing to use any AI will be like saying you refuse to use the Internet or social media for your work just because those technologies have some negative effects on society. Many organizations will probably find that too extreme (and harmful to their results and impact), so they will hire someone else.

B) You can use certain AI tools in a way that aligns with your principles and has a positive impact on society. Learn how to use AI tools responsibly to generate positive effects (increase your creativity, productivity, social impact, etc.) and reduce the negative ones (use energy-efficient models and tools, reduce bias and misinformation with AI help, etc.).

While leveraging AI to “do good” on a personal level, you can pressure governments to take action on a global level (banning certain high-risk AI models and uses, better redistributing the benefits generated by AI and automation, protecting the rights of artists and other affected groups, etc.). This can be far more effective than simply refusing to use AI.

Conclusion

AI is powerful, but it’s just another tool. We should be conscious and careful when using it, but some AI uses can have a very positive effect (benefits much bigger than the risks and disadvantages). Refusing to use any AI can do more harm than good to your nonprofit and your positive impact on the world.

AI gives us an opportunity to increase our social impact. We can choose to take this opportunity or ignore it, but AI will change our society anyway.
