Key AI risks for nonprofits & mitigation strategies

Now that we have seen many uses of AI, we should address the most important risks that AI can bring to nonprofit organizations and how to reduce them.

This chapter will guide you through the essential steps of identifying, mitigating, and managing these risks.

Why risk management is different (and especially important) for nonprofits

Nonprofit organizations face a unique set of challenges and considerations when it comes to AI. The stakes are often higher and the resources are often tighter:

- Reputation as a key asset: Nonprofits thrive on public trust and are usually held to a higher ethical standard than other organizations. AI-related missteps can damage your reputation and impact your funding, volunteer recruitment and other key areas. This requires a proactive and thoughtful approach to AI ethics, going beyond simply complying with legal requirements.

- Resource constraints: Most nonprofits don’t have the massive budgets and dedicated AI teams of large corporations. This means that your approach to risk management needs to be simple and cost-effective.

- Vulnerable populations: Many nonprofits work with vulnerable populations who may be disproportionately affected by AI biases and errors. This requires extra vigilance in ensuring fairness, accuracy, and accountability in your AI systems.

We will give some brief recommendations for risk management in the following sections, taking into account the special needs of nonprofit organizations.

Key AI Risks for nonprofits

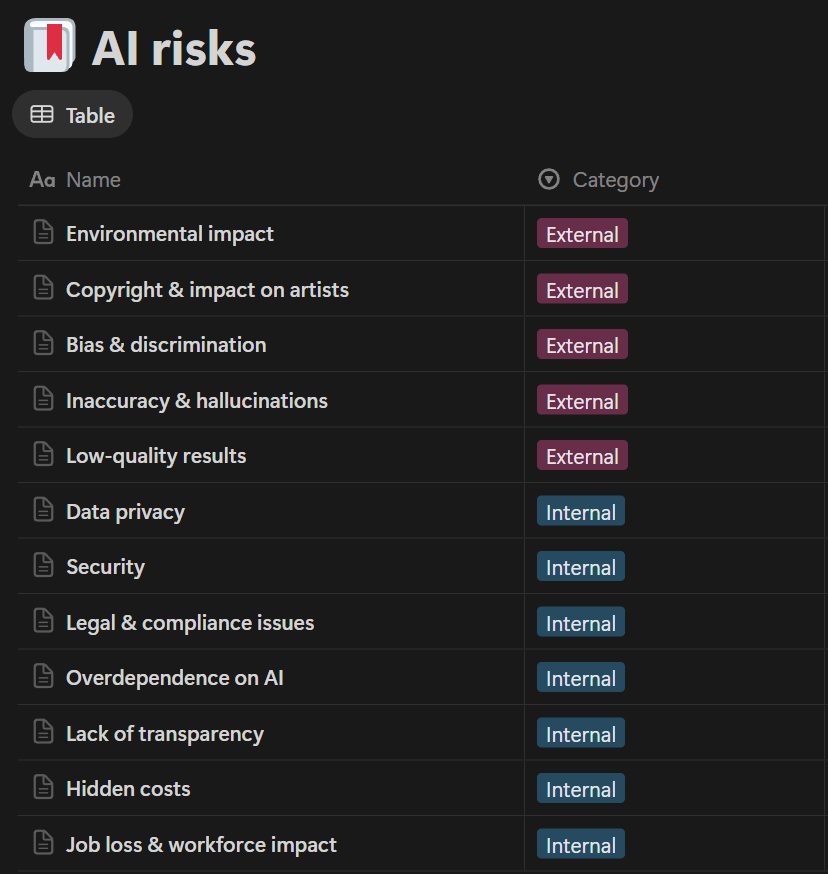

I have created a database with the risks that are most probable and impactful in the nonprofit context.

It includes many examples of how those risks can arise in common nonprofit tasks and useful tips to avoid or at least minimize those risks.

If you hover over any name in the Name column, you will see an “OPEN” button. Click it to see all the details of each risk.

Prompts

You can use ChatGPT and similar tools (Google Gemini, Claude, etc.) to help you detect possible risks. Sometimes they give us ideas that we haven’t thought about. However, always double-check the AI output, because it might include irrelevant or even incorrect recommendations.

Remember that you can personalize the prompts (e.g., mention if you want to focus on specific topics, risks or tools) and give additional instructions according to the results you get (e.g., “give me more details and examples about the risk X”).

You will see in yellow (starting with “>”) the lines where you should include your organization’s info. Feel free to add or remove lines if you think that will help you get better answers for your specific needs.
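If you reuse these prompts often, you may prefer to fill in the “>” lines once and paste the same context into every prompt. The following is only a minimal sketch of that idea in Python. The function names (`build_context_block`, `missing_fields`) and the example answers are hypothetical and were made up for illustration; the field names are the ones used in the prompts of this chapter.

```python
# Field names copied from the "Our context" block of the prompts in this chapter.
CONTEXT_FIELDS = [
    "Our mission",
    "Our challenges",
    "Our goals",
    "Software that we use",
    "Ethical considerations",
]


def build_context_block(answers: dict[str, str]) -> str:
    """Return the '# Our context #' block with one '> field: answer' line per field.

    Fields that are missing from `answers` are left blank so they are easy to spot.
    """
    lines = ["# Our context #", ""]
    for field in CONTEXT_FIELDS:
        value = answers.get(field, "").strip()
        # rstrip() avoids a trailing space when the answer is empty.
        lines.append(f"> {field}: {value}".rstrip())
        lines.append("")
    return "\n".join(lines).rstrip()


def missing_fields(answers: dict[str, str]) -> list[str]:
    """List the context fields that still need an answer."""
    return [f for f in CONTEXT_FIELDS if not answers.get(f, "").strip()]


if __name__ == "__main__":
    # Invented example answers; replace them with your organization's info.
    example_answers = {
        "Our mission": "Provide free tutoring to low-income students",
        "Software that we use": "Spreadsheets, email newsletter tool",
    }
    print(build_context_block(example_answers))
    print("Still to fill in:", missing_fields(example_answers))
```

Paste the printed block in place of the “# Our context #” section of any prompt below. Prompts that ask for extra lines (such as “Vulnerable populations” in prompt 2) would need that field added to the list.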

1. Identifying AI Risks for a Nonprofit:

You are an expert in AI risk assessment for nonprofit organizations.

I need your help to identify the top AI risks that our nonprofit should be aware of, considering our context explained below.

# Our context #

> Our mission:

> Our challenges:

> Our goals:

> Software that we use:

> Ethical considerations:

# Requirements #

Please explain the top 5 AI risks that are particularly significant for our organization and include the following info for each one:

1. Description

2. Why each risk is particularly relevant to our organization

3. Potential consequences or impacts of each risk.

4. Recommendations to mitigate each risk.

2. Addressing AI Bias and Errors with Vulnerable Populations:

You are an ethical AI consultant specializing in protecting vulnerable populations served by nonprofits.

I need your help to brainstorm scenarios where AI bias or errors could negatively impact our beneficiaries and develop mitigation strategies, considering our context explained below.

# Our context #

> Our mission:

> Our challenges:

> Our goals:

> Software that we use:

> Ethical considerations:

> Vulnerable populations:

# Requirements #

1. Brainstorm 5 scenarios where AI bias or errors could have a disproportionately negative impact on our beneficiaries.

2. Develop specific mitigation strategies for each scenario, focusing on fairness, transparency, and accountability.

3. Evaluating Data Privacy and Security of AI Providers:

You are a data privacy and security expert specializing in AI vendor assessment for nonprofits.

I need your help to develop a set of questions to evaluate the data privacy and security practices of third-party AI providers, considering our context explained below.

# Our context #

> Our mission:

> Our challenges:

> Our goals:

> Software that we use:

> Ethical considerations:

# Requirements #

Please develop a list of questions that cover:

1. Data storage practices.

2. Data access controls.

3. Encryption methods.

4. Compliance with relevant data privacy regulations (e.g., GDPR, CCPA).

5. Data retention and deletion policies.

6. Incident response and breach notification procedures.

7. Auditing and security certifications.

4. Checklist for Reviewing AI-Generated Content:

You are an ethics and quality assurance specialist for AI-driven content in nonprofits.

I need your help to develop a checklist for reviewing AI-generated content for potential biases, inaccuracies, and ethical concerns. Consider our context explained below.

# Our context #

> Our mission:

> Our challenges:

> Our goals:

> Software that we use:

> Ethical considerations:

# Requirements #

Please create a checklist that includes guidelines for:

1. Identifying potential biases in language and representation.

2. Verifying the accuracy and reliability of information.

3. Ensuring compliance with ethical guidelines and organizational values.

4. Implementing human review and oversight processes.

5. Documenting review findings and corrective actions.

5. Crisis Response Plan for AI-Related Incidents:

You are a crisis management expert specializing in AI-related incidents for nonprofits.

I need your help to outline a crisis response plan for AI-related incidents, such as data breaches or ethical missteps, considering our context explained below.

# Our context #

> Our mission:

> Our challenges:

> Our goals:

> Software that we use:

> Ethical considerations:

# Requirements #

Please outline a crisis response plan that includes:

1. Key elements for rapid response.

2. Procedures for clear and transparent communication with stakeholders.

3. Steps for effective remediation and damage control.

4. Roles and responsibilities of key personnel.

5. Protocols for internal and external communication.

6. Post-incident review and learning processes.

Links

- AI Governance Framework for Nonprofits (great toolkit developed by Microsoft)

- Artificial Intelligence (AI) Ethics for Nonprofits (another good toolkit, by NetHope)

- Tools and guides for responsible AI (not specific to nonprofits, but useful anyway)

- A.I. Checklist for charity trustees and leaders (useful questions that you might want to ask in your organization)

- Responsible Prompting and Practices for Generative AI (brief cheat sheet by Beth Kanter)

- AIF360 & Fairlearn (open-source tools for developers to improve fairness in AI systems)